
Senator Wicker Asks Important Questions About PTSD C&P Exam Quality

During a 2012 hearing of the U.S. Senate Committee on Veterans' Affairs,[1] Senator Roger F. Wicker (R – Mississippi) asked important questions about PTSD C&P exam quality. Specifically, he asked the VA to:

"Describe how the VA conducts quality control of PTSD C&P exam results, and C&P examiner performances."

VA Responds with Eloquent Obscurantism

This article critiques the VA's answer to Senator Wicker's questions.

By the way, Senator Wicker served 28 years in the United States Air Force (active duty and reserves), including service in the Air Force Judge Advocate General's Corps, and retired at the rank of Lieutenant Colonel.


Senator Wicker's Question

Senator Wicker asked VA to:

"describe how the VA conducts quality control of PTSD C&P exam results, and C&P examiner performance."

The relevant portion of VA's response is quoted below.

VA's Response to Senator Wicker

VHA's Office of Disability and Medical Assessment (DMA) conducts quality reviews of VA Compensation and Pension (C&P) examination requests made by VBA and examinations completed by VHA clinicians. The Quality Management section, an integral component of DMA's quality and timeliness mission, is responsible for the collection and evaluation of VA disability examination data to support recommendations for improvement throughout the VHA and VBA examination process. The quality review program incorporates a three-dimensional approach consisting of an audit review process to assess medical-legal completeness, performance measures, and a review process to assess clinical examination reporting competence.

A mix of staff knowledgeable in both the clinical protocol/practices of the C&P examination process and staff with VBA rating experience perform the reviews. This monthly random sample can include all potential exam types. This quality review process started in October 2011, replacing the former C&P Examination Program that was discontinued in October 2010. Ongoing enhancements to data collection will provide VBA and VHA with detail data to support process improvement.

DMA is charged with improving the disability examination process by monitoring the quality of examinations conducted. Quality is monitored monthly using an audit review tool and the results are reported on a quarterly basis. This intense audit is conducted on all types of disability examinations, assessing consistency between the medical evidence and the examination report.

[Note: Please see the hearing transcript for the first paragraph of VA's response concerning VBA's STAR program, and the final paragraph, which discusses DMA's disability examiner registration and certification process.]

Analysis of VA's Response

In the following sections I analyze the constituent parts of VA’s statements and discuss concerns with each.

Quote #1 from VA's response:

"The quality review program incorporates a three-dimensional approach consisting of an audit review process to assess medical-legal completeness, performance measures, and a review process to assess clinical examination reporting competence."

Problem: Ill-defined terminology

The term "medical-legal completeness" is not defined in any VA documentation I could find. It is not a term of art in veterans law, disability medicine, forensic psychiatry, or forensic psychology. Therefore, it is not clear what the phrase means.

The phrase "audit review process" presumably refers to the Audit Review Tool (subsequently renamed the Audit Review Criteria) which is addressed below.

Performance measures are not relevant to the Senator's questions, as they assess productivity, e.g., the percentage of exams completed within the required 30-day time frame. While important, productivity measures do not assess quality.

The phrase "a review process to assess clinical examination reporting competence" is vague. Does it refer to the competence of the reporting process? Or to the competence of the disability examination itself?

Quote #2 from VA's response:

"A mix of staff knowledgeable in both the clinical protocol/practices of the C&P examination process and staff with VBA rating experience perform the reviews."

Problem A: Misleading

One would think that the “knowledgeable” staff would be experienced C&P psychologists and psychiatrists. Unfortunately, that is not the case.

None of the DMA staff who conduct these reviews are psychologists or psychiatrists. In fact, none of them are mental health professionals.

Problem B: Limited Relevance

Including "staff with VBA rating experience" might sound good on the surface. Unfortunately, such a background has limited relevance when it comes to reviewing the quality of a C&P exam.

Sure, a VBA staffer can help identify "ratability" problems, but how would they know if the evaluation methods, knowledge, integration of data, and reasoning of the examiner were consistent with professional standards?

VBA raters (Rating Veterans Service Representatives, or RVSRs) do not conduct C&P examinations, and they are not health care professionals. They know how to extract information from an exam report for rating purposes, but they do not possess the requisite education, training, and experience to evaluate the overall quality of a C&P exam for PTSD or other mental disorders.

Quote #3 from VA's response:

"DMA is charged with improving the disability examination process by monitoring the quality of examinations conducted. Quality is monitored monthly using an audit review tool and the results are reported on a quarterly basis."

Problem: The Audit Review Tool does not measure the quality of a C&P examination.

The Audit Review Tool is used only to determine whether an exam report is "sufficient for rating purposes," and the review is not conducted by psychologists or psychiatrists.

Questions 1–8 are used to evaluate VBA staff—did they request a C&P exam properly? Thus, the first eight questions are not relevant to PTSD exam quality.

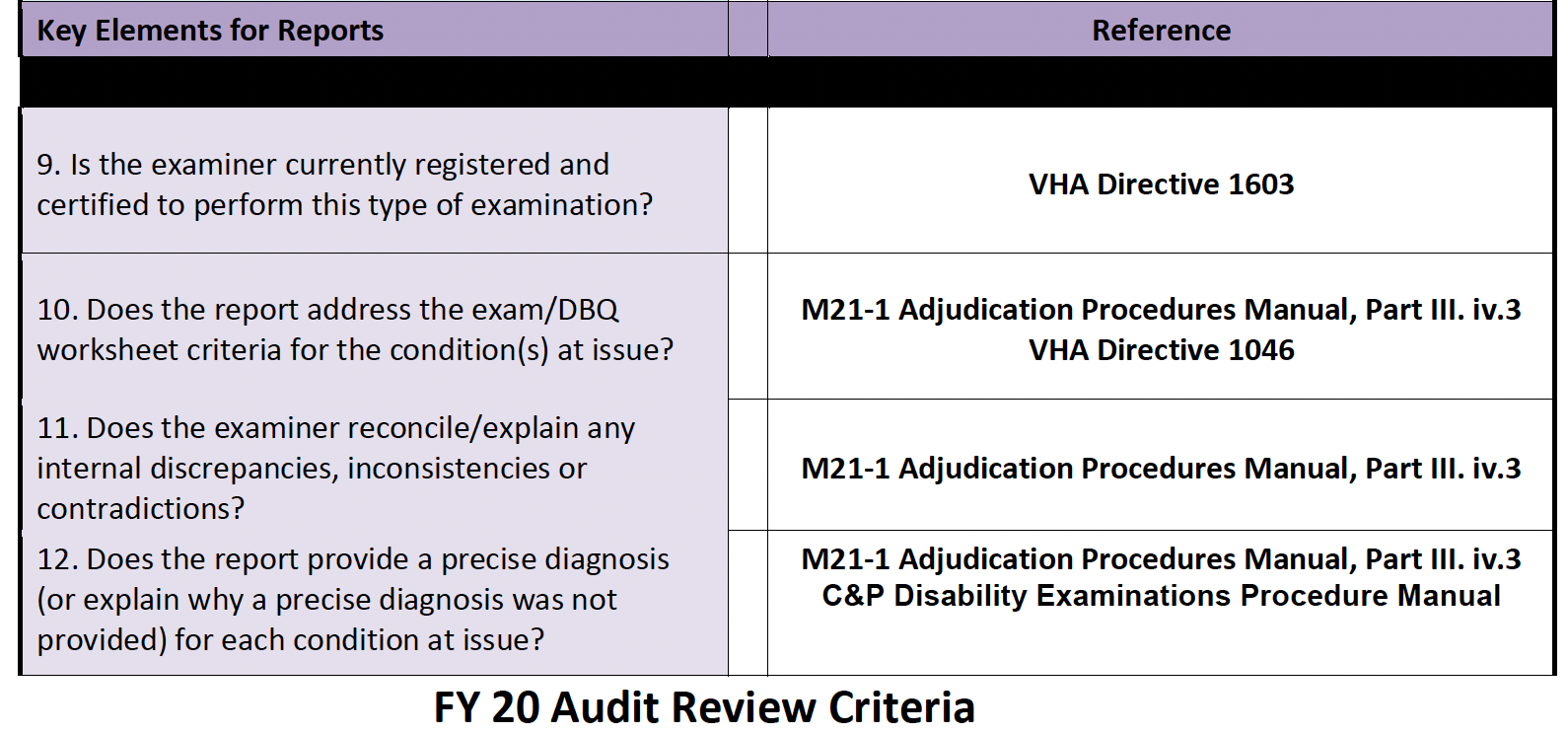

The following questions (numbers 9 through 17) are from a more recent iteration of the Audit Review Criteria—the FY2020 Audit Review Criteria—but they are quite similar to questions 9–17 on the FY2012 Audit Review Tool in effect at the time Senator Wicker asked his questions about PTSD exam quality.

FY2020 Audit Review Criteria

The images below are screenshots of the Fiscal Year 2020 Department of Veterans Affairs Audit Review Criteria, questions 9–17. Questions 1–8 are used to evaluate VBA staff; questions 9–17 are used to evaluate C&P exams.


Audit Review Criteria: Analysis of Questions 9–17

9. Is the examiner currently registered and certified to perform this type of examination?

Comment: Examiner qualifications are important to check, although VBA staff could (and perhaps already do) confirm examiner registration and certification.

Plus, this question does not address the quality of the exam itself. It asks only if the examiner possesses the minimum qualifications for conducting C&P exams.

10. Does the report address the exam/DBQ worksheet criteria for the condition(s) at issue?

Problem A: Ambiguous and imprecise. For example, what are "exam/DBQ worksheet criteria" (DBQ = Disability Benefits Questionnaire)? If you search for the term as written, you will find my website and that's about it. If it were a standard term, a Google search would turn up many web pages and documents that use it.

Problem B: This item references VHA Directive 1046, which "outlines VHA policy for the performance of Compensation and Pension (C&P) disability examinations ...."

VHA Directive 1046 does not say anything about DBQs, worksheets, or exam/DBQ worksheet criteria.

Problem C: The Audit Review Criteria document contains a table whose right column lists references for each Audit Review Criteria item. For question 10, the other reference (besides VHA Directive 1046) is:

M21-1 Adjudication Procedures Manual, pt. III, subpt. iv, chap. 3

That M21-1 Manual chapter in turn contains six sections, and each section contains between two and 10 subsections.

In other words, this M21-1 chapter contains a lot of material.

One might reasonably ask: exactly which section(s) or subsection(s) of chapter 3 pertain to Question 10? Your guess is as good as mine.

Citing a work that contains voluminous material defeats the purpose of a citation. As the Chicago Manual of Style puts it, "source citations must always provide sufficient information ... to lead readers directly to the sources consulted ...."

11. Does the examiner reconcile/explain any internal discrepancies, inconsistencies or contradictions?

Problem: Since the reviewers are not mental health professionals, they may not recognize many such discrepancies or contradictions.

In addition, without education, training, and experience in psychiatry or psychology, how do reviewers know if an examiner's explanation is well-founded?

12. Does the report provide a precise diagnosis (or explain why a precise diagnosis was not provided) for each condition at issue?

Problem: Since the staff reviewing exam reports are not mental health professionals, how would they know whether a "precise diagnosis" has been provided for "each condition at issue"?

For example, if a veteran files a disability claim for PTSD and the examiner assigns diagnoses of "Unspecified Depressive Disorder" and "Unspecified Anxiety Disorder", how does the reviewer know whether or not those diagnoses are "precise", from a scientific or medical perspective?

And how would a reviewer know if the diagnoses were properly applied to "the condition at issue"?

A psychologist or psychiatrist would recognize that these diagnoses are by their nature not precise, and could represent an inadequate evaluation.

In addition, for a PTSD claim, the examiner should at least consider the diagnosis of "Other Specified Trauma- and Stressor-Related Disorder" (a common diagnosis for subsyndromal post-traumatic stress) and explain why that diagnosis does not apply.

13. If there is a change in the diagnosis of a service connected condition, did the examiner provide an explanation or rationale for the change?

Note that the reviewer (appropriately, since reviewers are not mental health professionals) does not evaluate the examiner's explanation or rationale for accuracy. The reviewer simply checks a "Yes" or a "No" box.

Problem: VA does not evaluate the quality of the examiner's explanation or rationale.

14. If the examiner documented the presence of a condition requiring immediate medical care or further evaluation, was there documentation that the Veteran was notified?

Comment: A good question! It doesn't directly assess exam quality, but it's so important that it ought to be checked.

15. If a medical opinion was requested, did the examiner indicate that the claim file, VBMS, and/or Virtual VA were reviewed in conjunction with any other records?

Problem: An examiner stating that he or she reviewed the claims file is one thing; reviewing the file thoroughly, analyzing carefully, and integrating information from the records review into a coherent understanding of the veteran, is another thing entirely.

16. If a medical opinion was requested, was the requested medical opinion provided?

17. If a medical opinion was requested, was a rationale provided for the requested medical opinion?

Problem: These two questions (appropriately) do not ask the reviewer to evaluate the adequacy of the medical opinion and its rationale; they simply ask whether the examiner wrote one. (Another yes-or-no checkbox item.)

A genuine quality assurance program would have experienced C&P psychologists or psychiatrists evaluate the adequacy of each medical opinion and its rationale.

Summary of Audit Review Analysis

Thus, it is not much of an exaggeration to say that the current review process consists of glancing at the DBQ to see if the examiner filled in all the required fields.

Granted, the information checked via the Audit Review process is important with regard to the ratability of the exam report.

But the Audit Review process does next to nothing to evaluate exam quality.

In other words, a computer program could fill out a DBQ with random but coherent-sounding information, checking all the appropriate boxes and filling every field with text. Even though that information would have nothing to do with the veteran's actual symptoms or functional impairment, the Audit Review process would find the report acceptable.

Quote #4 from VA's response:

“This intense audit is conducted on all types of disability examinations, assessing consistency between the medical evidence and the examination report.”

Problem with VA's statement (Quote #4)

Problem: The statement does not correspond with what VA actually does.

How is consistency between the medical evidence and the examination report assessed?

It's not.

Since the reviewers are not psychologists or psychiatrists, they do not possess the requisite education, training, and experience to understand and assess the relevant medical evidence.

Consequently, the reviewers cannot assess the consistency between the medical evidence and the examination report.

Conclusion

VA provided an eloquent response to Senator Wicker that slides across the page so gracefully, you almost want to believe everything it claims, even if you know better.

But since you do know better, and since no one wants to let someone's silver-tongued prose cast a spell over them, you can decide for yourself if VA responded completely and honestly to Senator Wicker's astute query.

My conclusion: VA's response to Senator Wicker epitomizes well-crafted obscurantism.

What is obscurantism?

My favorite description of obscurantism is from Buekens & Boudry (2015):

"The charge of obscurantism suggests a deliberate move on behalf of the speaker, who is accused of setting up a game of verbal smoke and mirrors to suggest depth and insight where none exists. The suspicion is, furthermore, that the obscurantist does not have anything meaningful to say and does not grasp the real intricacies of his subject matter, but nevertheless wants to keep up appearances, hoping that his reader will mistake it for profundity."

- Filip Buekens and Maarten Boudry, “The Dark Side of the Loon. Explaining the Temptations of Obscurantism,” Theoria 81, no. 2 (2015): 126. https://doi.org/10.1111/theo.12047

Here are some definitions of obscurantism from good dictionaries:

“Deliberate obscurity or evasion of clarity.” - Random House Kernerman Webster’s College Dictionary, https://www.thefreedictionary.com/Obscurantists

“Opposition to inquiry, enlightenment, or reform ….” - Oxford English Dictionary, 3rd ed., Oxford University Press (2004, rev. 2018), https://www.oed.com/view/Entry/129832

“A policy of withholding information from the public.” - American Heritage Dictionary of the English Language, 5th ed. (2018), https://ahdictionary.com/word/search.html?q=obscurantism

Footnotes

1. VA Mental Health Care: Evaluating Access and Assessing Care, Hearing before the S. Comm. on Veterans’ Affs., 112th Cong. 20–22 (2012) (response to posthearing questions by Sen. Wicker to William Schoenhard, Dep. Under Sec’y Health Operations & Mgmt., Veterans Health Admin.), https://www.gpo.gov/fdsys/pkg/CHRG-112shrg74334/pdf/CHRG-112shrg74334.pdf

PTSDexams.net is an educational site with no advertising and no affiliate links. Dr. Worthen conducts Independent Psychological Exams (IPE) with veterans, but that information is on his professional practice website.

